Waveguides + Eye-Tracking + EEG

Monday, October 24, 2016 at 11:48PM

Microsoft HoloLens and startups like Magic Leap have captured the world’s attention and generated a lot of excitement around augmented reality smartglasses. The space is moving fast. Yesterday Google acquired Eyefluence, maker of eye-tracking hardware for use in both virtual and augmented reality. This ups the ante on DigiLens, maker of eye-tracking technology and waveguide optics for the aerospace industry.

Through Tim Cook’s hints, hires, acquisitions, and a healthy flow of patent filings, one can read the tea leaves and see that Apple also has an AR product taking shape.

What we know nothing about is what Apple is doing with displays. The company has thus far made no acquisitions in near-eye optics, nor does its patent portfolio indicate that it is working in this area.

Jonathan Waldern of DigiLens

For this expertise I have turned to Dr. Jonathan D. Waldern, founder of DigiLens, maker of waveguide-based optical display systems. Waveguide displays are used in Microsoft HoloLens, in their case based on a design patented by Nokia. This same patent is also licensed by Vuzix for use in their enterprise-focused M3000 series monocle display. While Magic Leap are perhaps as secretive as Apple, much can be gleaned from their patent filings, most notably that they are also using waveguide-based displays.

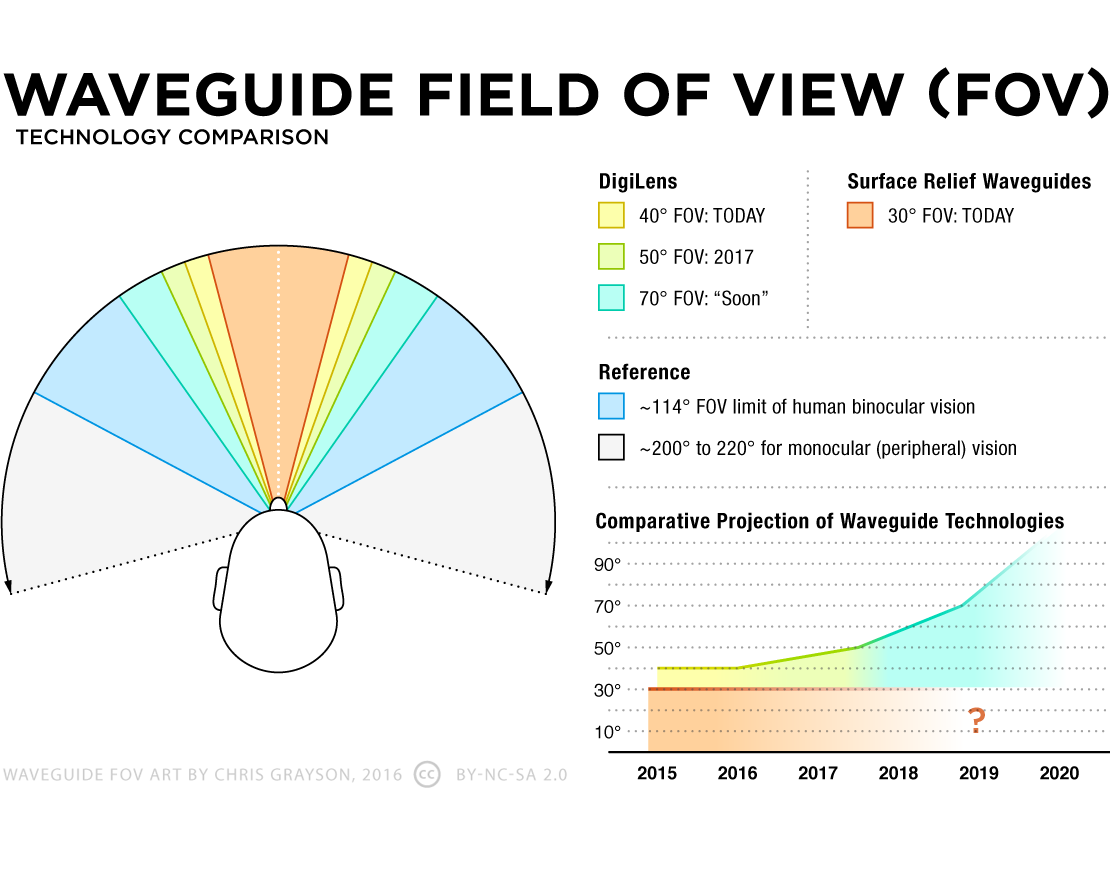

Nokia, Microsoft and Magic Leap displays all employ surface relief gratings to perform two-axis Exit Pupil Expansion (EPE) in their waveguide optics. EPE structures enable both a large eyebox (the area the eye can look through) and a wide field of view in an eyeglass form factor optic, essential for unobtrusive AR. Surface relief gratings (SRGs) are self-descriptive: the surface of the waveguide has nanoscale diffractive optical structures etched into it. Magic Leap differs in that it uses nano-imprint technology, printing rather than etching the surface relief structures. It’s noteworthy that Magic Leap secretively acquired a venture-funded nano-imprint equipment start-up called “Molecular Imprints” last July.

The problem is that SRGs are famously inefficient. The image entering the waveguide is diffracted into multiple orders (paths), so only a small fraction (about 30%) of the image brightness is delivered along the correct path into the AR display eyebox. This diffraction inefficiency translates directly into higher energy consumption. It also forces the display designer to suppress the unwanted orders, which in turn restricts the display’s field of view. This is why Microsoft HoloLens has such a limited 30° FOV and short battery life.
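To put the roughly 30% figure in perspective, here is a back-of-the-envelope sketch in Python. It assumes, as a simplification, that eyebox brightness scales linearly with the light injected into the waveguide. The function name and the lossless baseline are illustrative only and are not taken from any vendor's specification.

```python
def relative_source_power(efficiency: float) -> float:
    """Light the projector must inject, relative to a lossless waveguide,
    to reach the same eyebox brightness (assumes linear scaling)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return 1.0 / efficiency


# About 30% is the figure quoted in the text for surface relief gratings.
srg_factor = relative_source_power(0.30)
print(f"At 30% efficiency the source must supply about {srg_factor:.1f}x the light")
```

Under that assumption, a display that loses 70% of its light to unwanted orders has to drive its light source more than three times as hard for the same perceived brightness, which is one way to read the battery life complaint.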

By contrast, DigiLens has developed a new type of waveguide optics platform using a proprietary liquid crystal based photopolymer material, which is holographically exposed to form a Switchable Bragg Grating (SBG). This type of grating is very different from an SRG in that it is a volume Bragg grating, and it is also electrically switchable. The difference could not be more fundamental: an SBG diffracts all of the image into only one order (path). Using this efficiency advantage, DigiLens can make the SBG very thin, which allows a much wider field of view, up to 50° per waveguide layer. With the greater optical efficiency, the AR display is both brighter and has longer battery life.

The SBG waveguide is manufactured by coating a super-thin (micron-scale) layer of the material onto the eyeglass substrate and then holographically copying the EPE structure from a master using DigiLens’ proprietary laser copy scanner. What’s more, several waveguide layers can be optically overlaid, or “multiplexed”, to create an even wider field of view, with each layer dedicated to one 50° field of view segment.

DigiLens is already manufacturing an aircraft HUD for leading supplier Rockwell Collins, which has fully passed flight testing and extended lifetime testing. Independent testing confirms up to 100% optical efficiency. The company is also developing a companion waveguide eye-tracker for the USAF, which will laminate to the eyeglass display much as a touch layer is bonded to a smartphone. When the AR display knows where the user is looking, it can employ foveated rendering, concentrating detail where the eye is pointed to deliver the highest 4K x 4K resolution at the lowest latency.

While others in the field have been focused on consumer and enterprise solutions, DigiLens’ principal business partnership has been with Rockwell Collins. Their commercial avionics display is currently being deployed in Embraer Legacy 450 and 500 jets. Consequently, their work has received less attention from the consumer tech press. With their BMW partnership, this is beginning to change. The BMW smart motorcycle helmet, featuring DigiLens’ waveguide optics, debuted to much fanfare at CES early this year and will begin shipping in early 2017.

Waldern sees transportation as the most solid use case for waveguide optics at this time: a natural progression from avionics, to automotive, to motorcycles, and possibly to a future product for bicyclists. The faster one moves, the greater the risk in taking one’s eyes off the road. DigiLens is focused on use cases where “you don’t have to go to a million [units] before you make any money.”

DigiLens’ current single-layer waveguide AR display is already at 40° FOV per eye. They expect to achieve 50° FOV by year end and, by multiplexing two layers per eye, well over 100° “someday”, as the AR market and demand mature. If DigiLens maintains this progress, it won’t be long before they reach full binocular FOV as they perfect their laminated FOV-extending process.
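The arithmetic behind multiplexing can be sketched in a few lines of Python. This is a simplified model, not DigiLens’ design method: it assumes each layer covers one adjacent angular segment, and the `overlap_deg` blending margin between neighbouring segments is a hypothetical parameter introduced here for illustration. It gives a per-eye figure only and does not model how the two eyes’ fields combine into a binocular FOV.

```python
def multiplexed_fov(layers: int, fov_per_layer_deg: float,
                    overlap_deg: float = 0.0) -> float:
    """Per-eye field of view when each layer covers one adjacent segment.

    overlap_deg is a hypothetical margin shared by neighbouring segments.
    """
    if layers < 1:
        raise ValueError("need at least one layer")
    if not 0.0 <= overlap_deg < fov_per_layer_deg:
        raise ValueError("overlap must be non-negative and smaller than one segment")
    return layers * fov_per_layer_deg - (layers - 1) * overlap_deg


# 40 degrees is the current per-layer figure quoted above; 50 is the year-end target.
for per_layer in (40.0, 50.0):
    total = multiplexed_fov(2, per_layer, overlap_deg=5.0)
    print(f"two layers at {per_layer:.0f} degrees each: {total:.0f} degrees per eye")
```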

Waldern believes that surface relief waveguides cannot achieve the FOV and cost advantages of volume Bragg gratings, both because the physics fundamentally restricts them and because the high-precision etched manufacturing technique is likely very expensive, with costs akin to making a silicon chip the size of two eyeglasses.

“Nobody makes a display overnight, it takes a long time, unless you’ve just got a panel and a beam splitter, in which case it’s pretty easy. Or a big lens and a cellphone, that’s pretty easy. These are eyeglass displays. It’s tough … it all begins and ends with optics, that’s really the challenge in the wearables space.”

What’s Next?

There is a consensus in the industry right now — given the popularity of products like Amazon Alexa, Apple Siri, Microsoft Cortana, Google Assistant, not to mention Viv’s recent acquisition by Samsung — that the future of AR interfaces will be voice command. It is my position that voice will remain relevant in communication, but not as OS level navigation. Voice command is convenient at home or in a car (relatively private situations), but it lacks privacy and introduces etiquette issues in social environments, offices and public spaces.

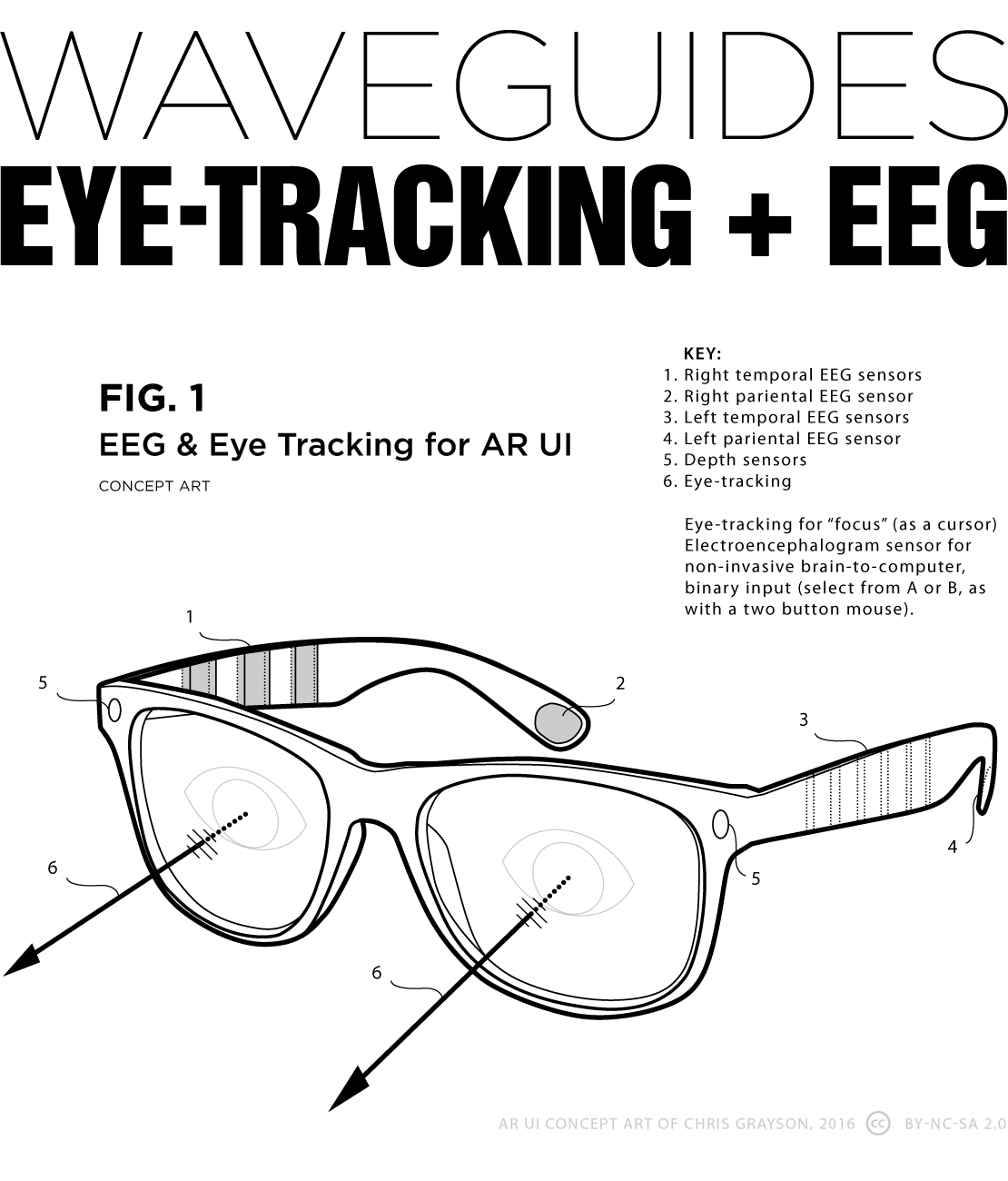

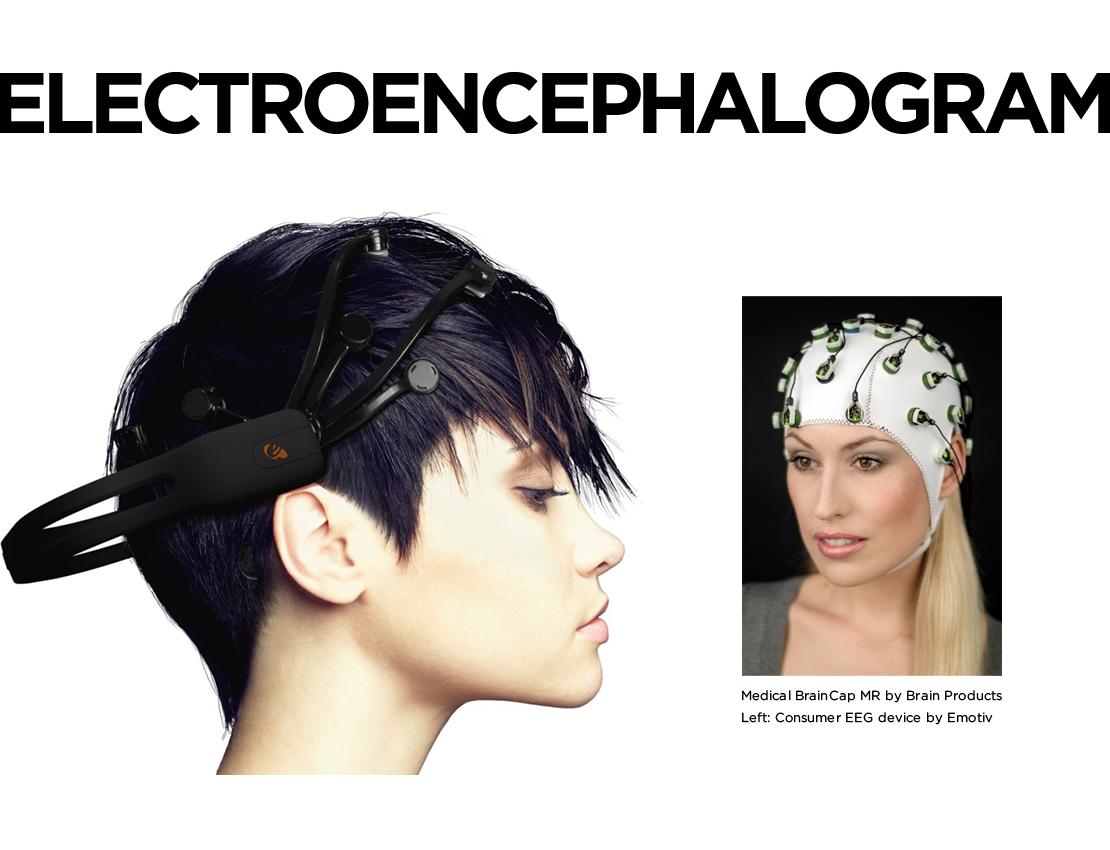

I discussed with Waldern my interest in brain-computer interfaces. Companies like NeuroSky and Emotiv have experimented with the use of electroencephalogram (EEG) technology as an input device. While others are pursuing subvocalization as a silent substitute for voice commands, I’m not an advocate of this technology for control tasks in consumer products, given both form factor (either a choker worn high on the neck or a sensor attached by adhesive to the throat) and privacy concerns (the ability to intercept the user’s “inner voice” during mental self-talk). Research by Dr. Bin He of the University of Minnesota’s Department of Biomedical Engineering on EEG as a brain-computer interface for victims of paralysis has seen exceptional recent advances. When contacted for this article, He stated that an “EEG based brain computer interface has demonstrated its capability to control a device such as controlling the flight of a model helicopter. The EEG based binary input device shall become available in the near future depending on the market needs.”

Speaking casually, Waldern and I entertained the concept of coupling eye-tracking technology for focus, much like a cursor, with rudimentary EEG binary input (command A / command B) to play the role of mouse buttons. This combination could prove to be the foundation of a powerful general purpose augmented reality user interface.
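As a thought experiment, the pairing might look something like the Python sketch below. Everything in it is hypothetical: the `Target` type, the coordinate system, and the mapping of command A to "select" and command B to "context_menu" are assumptions made for illustration, and no real eye-tracker or EEG device API is involved.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Target:
    """A rectangular on-screen element in display coordinates (hypothetical)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gx: float, gy: float) -> bool:
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


def target_under_gaze(targets: Sequence[Target],
                      gaze: Tuple[float, float]) -> Optional[Target]:
    """The gaze point plays the role of the cursor: return the first target it hits."""
    gx, gy = gaze
    for target in targets:
        if target.contains(gx, gy):
            return target
    return None


def handle_input(targets: Sequence[Target], gaze: Tuple[float, float],
                 command: str) -> Optional[str]:
    """The binary EEG command plays the role of the two mouse buttons."""
    actions = {"A": "select", "B": "context_menu"}  # assumed mapping
    if command not in actions:
        raise ValueError("command must be 'A' or 'B'")
    target = target_under_gaze(targets, gaze)
    if target is None:
        return None  # looking at empty space, so nothing to act on
    return f"{actions[command]}:{target.name}"


ui = [Target("mail", 0, 0, 100, 50), Target("music", 0, 60, 100, 50)]
print(handle_input(ui, (20, 70), "A"))  # prints "select:music"
```

The point of the split is that neither signal has to be rich: the eyes only say where, and the EEG input only says which of two things to do there.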

During the buildup to the Apple Watch, Apple brought on many team members with biometric experience from the medical field. As a result, based on a cursory LinkedIn search, Apple has 53 current employees who list some expertise or past experience with EEG technology. For instance, one year ago Apple hired Xiaoyang Zhang, who lists a PhD in Low Power Biomedical Circuits & Systems. This is not to say that Zhang is working on such a project, only that if Apple were to take this route, no high-profile acquisition would be needed: Apple already possesses this expertise in house (though I would not be surprised if Dr. He gets a phone call).

Waldern expressed admiration for Niantic’s Pokemon Go app, whose runaway success planted the seed in many minds for the potential of AR when interacting with the world around us. He then took me into a lab, over to a table where a collection of lenses lay about, others mounted on stands. These were as thin as those one might find in any designer sunglasses, thinner than those used in many prescription lenses. Looking through one lens, I could see a couple of 3D animated Pokemon characters hangin’ out. The image was much higher resolution than what I had seen demonstrated with HoloLens. DigiLens is not working on a Pokemon Go for smartglasses, he assured me, but it is a great demonstration piece to get people excited. I had seen a similar demonstration with different animated characters at ODG’s offices just a couple of weeks prior. While animated characters capture the imagination, they also raise the question on everyone’s mind: Wow … but what are they good for? This explains DigiLens’ focus on the proven market of transportation.

The first applications I expect to see in AR eyewear are the same ones we have in smartwatches and push notifications: the kind of information that, on an individual basis, we choose to filter to our awareness, without having to reach for our phones. A floating dashboard of sorts, in a location of our choosing, that feeds us information we’ve set up for alerts. For some this will be phone and text messages from family members only. Others with FoMO will have reams of data streaming to them at all times.

The smartphone interface put a supercomputer in our pockets and the knowledge of all humanity a few finger taps away. The downside is that we all walk around staring down into our phones. Smartglasses will improve our posture as they make us smarter. Some fear that smartglasses will place a barrier between us all. I guarantee they will do the opposite. They will give us all the advantages of communication and information retrieval while allowing us to stay present, with the added bonus of being hands free.

The next set of applications I expect to see will be annotation of reality: think metadata for all you survey, geotagged Wikipedia knowledge, or RapGenius for the world, if you will. If I could borrow a phrase from the security field: total situational awareness. If you want to know about something, anything around you, eye-tracking will know what you’re looking at, and EEG-based input will act upon your intent. This does not mean your view will be cluttered with data (unless you want it); the key word is “intent.” Want to know what that plant is? You will know. Want to know who makes that chair, where you can buy it, and how prices compare in the moment, at a glance? You will have your answer. Extrapolate that out to every niche interest personally and every market sector professionally. The applications for education are immense.

One of my favorite examples is Shazam. There will be no need to seek out your phone, unlock, and launch an app to identify music. If Shazam is on, it could simply be set up to tell you the artist and title that are playing as each song comes on, wherever you are.

One of the areas I’m most excited about is also a privacy concern to many: face recognition. Facebook has solved this specific privacy issue, and I expect face recognition in augmented reality will follow their precedent. With the world’s largest face recognition database, when a photo is taken in public it is likely that Facebook’s software is capable of recognizing virtually everyone present. But it doesn’t. Instead it only recognizes people who are in your own friend list. Dunbar’s number suggests that humans’ cognitive limitation on manageable relationships caps at about 150 people. But many of us maintain social networks of thousands of loose connections. How many times have you been at a business event and struck up a conversation only to learn later that you’re already connected on LinkedIn? How often have you been in a social situation and seen someone you knew that you knew, but had no recollection of their name?
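The friend-list restriction is easy to picture in code. The Python sketch below is purely illustrative and is not Facebook’s implementation: it assumes faces have already been reduced to numeric feature vectors by some upstream model, uses toy two-dimensional vectors, and picks an arbitrary similarity threshold. The privacy property comes from the search space: a face is only ever compared against the wearer’s own contacts, so a stranger can never be named.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recognize(face: Sequence[float],
              friends: Dict[str, Sequence[float]],
              threshold: float = 0.9) -> Optional[str]:
    """Return the best-matching friend, or None if nobody clears the threshold.

    Only the wearer's own friend list is searched (hypothetical design).
    """
    best_name: Optional[str] = None
    best_score = threshold
    for name, reference in friends.items():
        score = cosine_similarity(face, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Toy vectors standing in for real face embeddings.
my_friends = {"Ana": [1.0, 0.0], "Ben": [0.0, 1.0]}
print(recognize([0.99, 0.05], my_friends))  # close to Ana's vector
print(recognize([0.7, 0.7], my_friends))    # matches nobody well enough
```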

Now think of every function that there is an app for on your phone, and there will likely be a better version on your glasses. Google Translate on the fly? Done. Way-finding and navigation? You’ll never need to look down at your map app again.

As the technology progresses, expect to see it evolve into the visual interface of a full body network. Much as many of us are inseparable from our smartphones, so it will be with smartglasses, only with greater utility and less interruption.

In 2014 Jony Ive brought renowned product designer Marc Newson into Apple’s industrial design team. Marc had previously designed his own line of watches under the name Ikepod, and the Apple Watch has his fingerprints all over it. At the time much attention was paid to Marc’s previous work on Ford’s 021C concept car, given the media hype surrounding a possible “Apple Car.” The real advantage of Marc’s inclusion on Apple’s team is the sheer range of products he has designed, not least of which are designer eyeglass frames for Italian eyewear manufacturer Safilo.

A future so bright, we’ll all wear shades.