Moral Machine

Friday, October 14, 2016 at 1:04AM

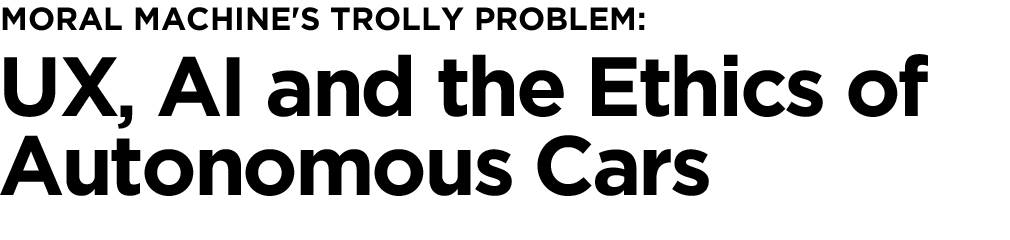

Moral Machine is an ethics-based game developed by a team at MIT. It attempts to expand upon the “Trolley Problem” as applied to AI decision making for autonomous vehicles (hint: they’re doing it wrong).

In its simplest form, the Trolley Problem presents the reader with a dilemma: you are standing at a fork in a railroad track with the switch within your reach. Several people on the track face certain death should the train continue on course. It is within your means to change the train’s course, steering it onto an alternate track where it will hit only one person. If you do nothing, you will witness multiple deaths that you could have averted. But by intervening, you cause the death of another stranger, effectively committing murder. This single individual is often portrayed as an “undesirable.” The dilemma is typically elaborated by altering various factors: What if, rather than an undesirable, it was a beautiful woman, a wealthy man, or a generally upstanding citizen in the community? What if it was a baby, and the larger group were elderly men? What if the single individual on the track watches you pull the lever that causes his or her death? And so on. It is a classic moral dilemma.

Applying the Trolley Problem and other moral dilemmas to the decision making of artificial intelligence is a common exercise. The creators of Moral Machine present the user with a scenario in which an autonomous vehicle’s brakes have failed and the player must choose among courses of action, each of which makes the death or injury of some combination of individuals unavoidable.

As a model for decision making in self-driving cars, I believe Moral Machine’s game dynamics are fundamentally flawed. Nonetheless, it sparked enough debate between my colleagues and me that I will share my own conclusions here.

The intrinsic flaw in the exercise is that it assumes an equivalent relationship between occupants of the vehicle and non-occupants; then, on the back end, it attempts to apply a social psychology filter to the player’s decisions. It approaches the problem with all the wrong assumptions, then uses the data collected to measure factors that an autonomous car should never take into consideration and that likely won’t be available to the AI anyway. An autonomous car doesn’t know which pedestrian is a doctor and which is a bank robber; such unnecessary anthropomorphism is not germane to the problem the AI should be solving.

So I played the game. You can play it too.

-

My own methodology when I played the game:

- Preserve the life of the occupants of the vehicle as priority #1.

- Minimize loss of life overall.

- Prioritize human life over animal life (animals were included in the scenarios).

- When faced with outcomes of equal death toll, do not factor anything else into the equation: no one human life should be prioritized over any other. Hence, do not make a “path correction” to avoid killing 5 people in group A in order to kill a different 5 people in group B.

But this is all a distraction.

-

In a real-world scenario, two paramount factors are excluded from the game dynamic:

- Warning on the part of the vehicle.

- Agency on the part of pedestrians.

And the fatal flaw, were this ever more than a thought experiment and actually applied to autonomous vehicle decision making, is the false equivalence it draws between the occupants’ relationship to the vehicle and that of any other actors.

Because the occupants are INSIDE the vehicle, they lack agency in the outcome. They are solely at the mercy of the AI; the AI is their custodian.

However, because those outside the vehicle have their own agency, their actions are unknown. The AI can factor in the statistical likelihood of other humans’ behavior, and even apply flocking algorithms to groups, but once multiple sentient beings with agency begin making decisions of their own in response to an approaching car, the car cannot know with certainty what they’re going to do. What it can do for pedestrians is give warning: honking, flashing lights, or even announcing that the vehicle has lost braking power, not to mention other accident-avoidance tactics like downshifting. (In any case, this will be an extreme outlier scenario.)

When an autonomous car encounters another autonomous car, it will know exactly how that car will behave: when one machine interacts with another, they will ideally be in communication, wirelessly signaling their intent to one another. Even if they are not in communication, a car will know that the approaching vehicle is Model 6 of Brand X and can immediately reference that vehicle’s known behavior. This is a big part of what makes autonomous cars incredibly safe.

In this regard, they will save more lives than human drivers do, even if early versions have some initial failings. (Especially with early models, every “kill” will make headlines and receive coverage out of proportion to human-caused auto accidents, which are only reported locally. This is an obvious PR problem that every player in this space is surely keenly aware of.)

Moral Machine sparked a debate among friends. Given the way the game frames the question, the conversation revolved around whose lives the vehicles should prioritize. I argued aggressively that there are only two groups: those inside the vehicle and those outside it. My ethical position rests upon the custodial relationship between passenger and vehicle, which does not exist between vehicle and pedestrian. I ultimately arrived at the following order of priorities:

- Protect the occupants.

- Protect others, provided it doesn’t violate rule 1.

- Protect animals, provided it doesn’t violate rules 1 & 2.

- Operate within the law, provided it doesn’t violate rules 1-3.

- Avoid property damage, provided it doesn’t violate any other rules.

Call it Grayson’s Law of Autonomous Automotive AI.
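The five rules form a strict priority ordering: a lower rule only matters when every higher rule is tied. One way to picture that is a lexicographic comparison over candidate maneuvers. The sketch below is purely illustrative: the `Outcome` class, its fields, and the numbers are hypothetical, and it pretends outcomes are known counts, whereas (as argued above) a real car would only have statistical estimates of what pedestrians will do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical estimated outcome of one candidate maneuver.

    All fields are illustrative assumptions, not a real vehicle API.
    Lower is better for every field.
    """
    name: str
    occupant_harm: int      # rule 1: occupants harmed
    other_harm: int         # rule 2: people outside the vehicle harmed
    animal_harm: int        # rule 3: animals harmed
    law_violations: int     # rule 4: traffic laws broken
    property_damage: float  # rule 5: estimated damage cost

    def priority_key(self) -> tuple:
        # Tuples compare element by element, so a later rule only
        # breaks ties left by every earlier rule (lexicographic order).
        return (
            self.occupant_harm,
            self.other_harm,
            self.animal_harm,
            self.law_violations,
            self.property_damage,
        )


def choose_maneuver(options: list[Outcome]) -> Outcome:
    """Pick the option that best satisfies the rules in strict priority order."""
    if not options:
        raise ValueError("no candidate maneuvers")
    # min() returns the first of several equally ranked options.
    return min(options, key=Outcome.priority_key)


if __name__ == "__main__":
    options = [
        Outcome("stay in lane", 0, 2, 0, 0, 0.0),
        Outcome("swerve onto sidewalk", 0, 1, 0, 1, 500.0),
        Outcome("swerve into barrier", 1, 0, 0, 0, 20000.0),
    ]
    print(choose_maneuver(options).name)  # swerve onto sidewalk
```

In this made-up example, the barrier is ruled out by rule 1, and breaking a traffic law (rule 4) is accepted because it spares a life (rule 2). Because `min` keeps the first of equally ranked options, listing the current course first would also honor the “no path correction” tie rule from my methodology.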

Others objected on the grounds that government authorities won’t certify an automobile as roadworthy unless it prioritizes “law” above all else. This argument is absurd. Governing bodies don’t certify the code; they certify by outcome. As long as self-driving cars are safer and have lower fatality rates than human drivers (and they will, exceptionally so), they will be allowed on the road.

I’m confident the industry will take this same route. At the time of this debate, which was initiated by my friend Robert Tercek, there was a lot of pushback on my prioritizing passengers over pedestrians. Make of the ethics what you will; this is an argument that will be decided by the market. If automotive Brand A makes an autonomous car that prioritizes the lives of pedestrians and automotive Brand B makes a car that prioritizes the lives of its occupants, I’ve got a stock tip for you.

Update 1

At the time of publishing, Mercedes-Benz made headlines by announcing that, in the event of an accident, its autonomous car prototype is programmed to prioritize the lives of the occupants over those of pedestrians. I doubt anyone involved in manufacturing autonomous vehicles is lining up to hire the Moral Machine team. If they are, I know a good stock to short.

Update 2

On October 20th, Tesla released this video of its Level 5 self-driving car: